Neural network in C# with multicore parallelization / MNIST digits demo

I've been working for a couple weeks on building my first fully functional artificial neural network (ANN). I'm not blazing any news trails here by doing so. I'm a software engineer. I can barely follow the mathematical explanations of how ANNs work. For the most part I have turned to the source code others have shared online for inspiration. In most cases I've struggled to understand even that, despite programming for a living.

Part of the challenge is that more than a few of those demos have surprised me by being nonfunctional. They did something for sure. They just didn't learn anything or perform significantly better than random chance making correct predictions, no matter how many iterations they went through. Or they had bugs that prevented them from working according to the well-worn basic backpropagation algorithm.

I mostly worked from C# examples when I could find them. One thing that was a genuine struggle for me to deal with is my sense that they all derived from one source from a decade and a half ago that itself had bugs. And which struck me as poorly structured to begin with. In short, I found it hard to read most of the source code samples I found because they were written in cryptic ways in my opinion. Along the way I wrote and rewrote from scratch. If I couldn't duplicate what was contained in one demo I might download and run it directly within my project. And usually I would find it wouldn't work for one reason or another. I was amazed that people blogged about the subject without apparently confirming that their own code worked properly.

For a while I was very frustrated because I was seeing a strange behavior nobody else had documented. My models would train and get very good. And then their accuracy rates would start falling off as though rolling down the other side of a hill. I spent over a week trying to figure out the cause. Ultimately I discovered an extra loop in my code that influenced training in a way that didn't demolish it completely, but which somehow compounded after a while to eventually undo all of the training. Once I fixed that I immediately started seeing my code behaving like everybody else's. Hooray!

I know there are lots of code samples out there already. But here is my own. My previous blog post showed a simplified version of this. Short enough to paste directly on the page. In this case I'm going to instead point you to a Github repository with my complete program in it:

I'm also going to skip trying to write an extensive explanation of how an ANN works, including the backpropagation algorithm. I think that has been very well covered on so many other websites that I would have little more of value to add. So I'll just tell you a little more about what's in my demo code.

For starters, my demo has a solidly OOP structure. The very reusable basis is a set of NeuralNetwork, Layer, and Neuron classes. These classes are well oriented toward the basics of both training and later practical use. NeuralNetwork features .FromJson() and .ToJson() methods for serialization of the trained state of a model. The layers can be separately configured to use different learning rates and activation functions, including Softmax, TanH, Sigmoid, ReLU, and LReLU. You can have as many hidden layers as you want too. NeuralNetwork offers various ways to inject input values and get your output, including the .Classify() method, which gives you an integer representing which output neuron had the highest value and is thus the predicted class. I've added a lot of inline comments to help explain everything for both the practical programmer and the programmer looking to understand the inner workings.

I didn't want to only focus on readability. I also put a lot of thought into performance. Starting with memory. You might think that instantiating one Neuron instance for each logical neuron would be very memory wasteful but it's not. I tested this with very large test networks with thousands and even millions of neurons. As the number of neurons grows and thus the number of interconnections among them, the size of the memory footprint of the network approaches 4 bytes times the total number of input weights. That's 4 bytes per floating point number, which is the common currency for this code. So if you had a network with 1,000 hidden-layer neurons and 1,000 output-layer neurons, that accounts for 1,000,000 input weights and thus the total network will take up around 4MB of memory. Which is quite compact. One thing my code does not do during training or behaving is allocate temporary arrays or collections that then go away. That saves memory and speeds things up.

The structure of my code lends itself to speedy execution too. I just configured a network for the MNIST demo with 784 inputs, 100 hidden-layer neurons, and 10 output neurons. The latter 2 layers use the sigmoid activation function. In about 75 seconds it has churned through 100k training iterations on my laptop. Which has an 8-processor, 16-core CPU set running at around 4.27 GHz. I think this is decent performance and is a result of a reasonably optimal coding. But I also added a switch to enable each layer to spread the training and behaving calculations out across all the computer's processors. In my fairly small tests this about doubles the speed. With larger networks I start seeing 7x speedups. I haven't tried it on any very large networks with millions of nodes yet. Hopefully it starts approaching a 16x speedup for 16 cores and so forth.

My code has a shabby set of demos included. One is a classic XOR gate demo. This one is great for study because it is such a small network that you can visualize the whole network fairly easily.

The second demo similarly involves synthetic data in the problem of learning to classify all of the ASCII characters from 32 (space) to 95 (tilde) as Whitespace, Symbol, Letter, Digit, or None. This network has 7 inputs representing the 7 bits needed for these characters, 10 hidden-layer neurons, and 5 output neurons representing the character classes. This one uses the LReLU activation function.

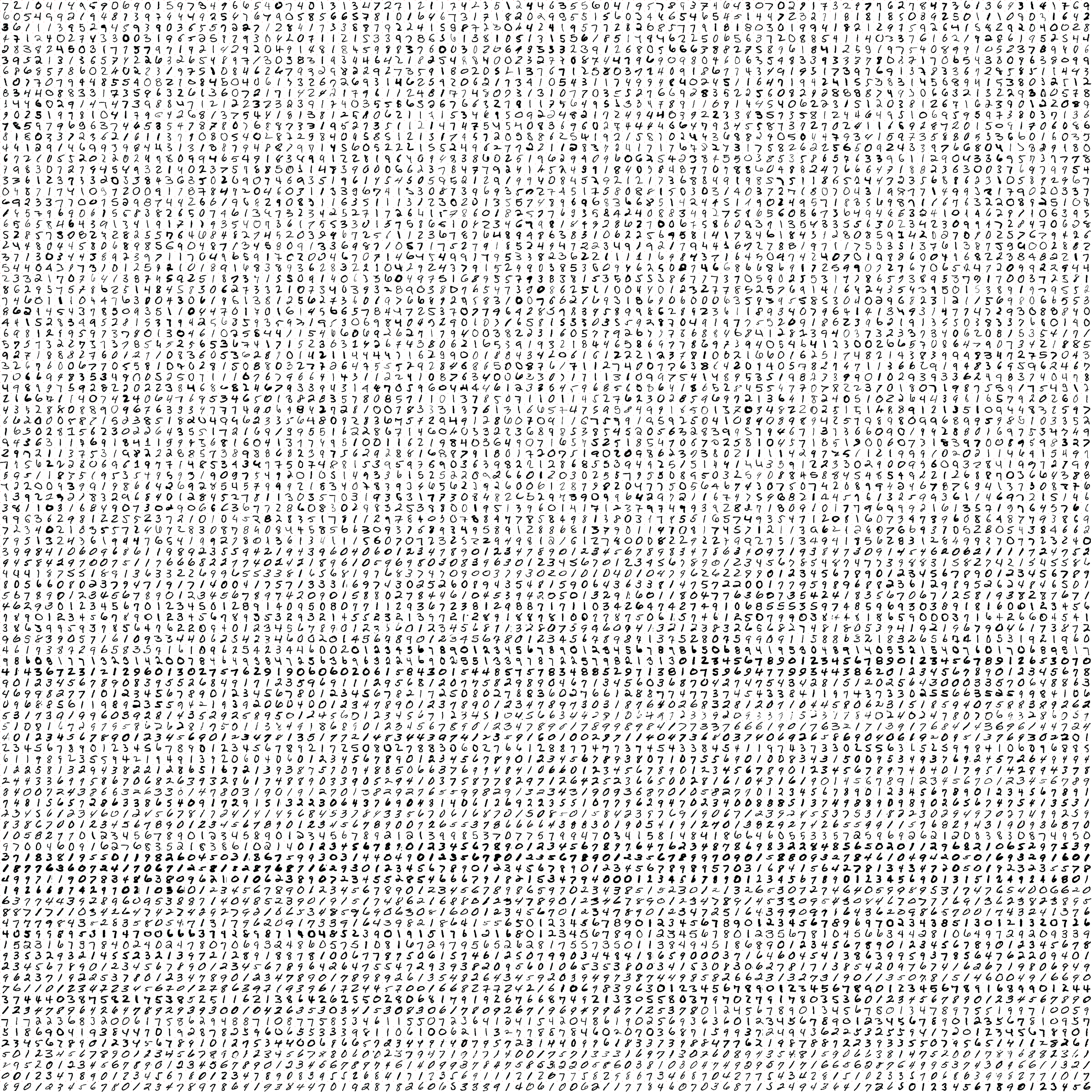

The final demo uses the aforementioned MNIST data set. The files can be found on the MNIST project page. I was frustrated at how slow loading the source data files was each time. I included a utility function to convert the training and test file pairs into pure .PNG files. Here's the smaller test image:

If you zoom in very close you will see single red pixels in the upper left corner of each digit tile. Since every source pixel was white I decided to pack the digit's value into the blue channel. In the example below you can see that the "7" digit's actual value is packed into the (255, 0, 7) RGB value.

Doing this one-time transformation of these 4 files into 2 PNGs is great. The PNG files are smaller and load much faster. And they are easier to visualize using an ordinary image viewer or paint program.

My results seem in line with those documented on the MNIST project site. I experimented with a lot of network configurations and parameter values. But for one concrete example, I configured the single hidden layer with 100 neurons using the sigmoid activation function and a learning rate of 0.1. My accuracy rate on the test set was 97.3% after 1M training iterations. That's a 2.7% error rate. The closest comparison I can see in the table of results is listed as "2-layer NN, 1000 hidden units" with no preprocessing (same as mine). The error rate on that is reported as 4.5%. That was from a 1998 paper by LeCun et al.

Quick note. You're going to need to edit this line in Program.cs to point to your own project data folder immediately under the project root folder:

static string dataDirectory = @"G:\My Drive\Ventures\MsDev\BasicNeuralNetwork\Data\";I want to emphasize that while I have worked on this code for a couple weeks and feel very good about it, I can't guarantee it is without bugs. I encourage you to comment here or reach out to me if you find any bugs, no matter how small.

I'd also welcome hearing from you about your experiences in using this code for your own projects. Cheers.

Was the network configuration experiment you did successful and is there a public use?

ReplyDelete